This section describes how to prevent search engines and site grabbers from locating your images.

8. Search & Spider Prevention

It's pointless trying to protect images if every one of them can be located remotely by search engines spidering from your home page. So the first thing to do, before anything else, is to ensure that your images do not turn up in any search engine's image results.

In theory, a robots.txt file can tell search engines not to spider certain files and folders. This plain-text file can be created with Notepad and placed in the root of your web site. Most search engines look for robots.txt before crawling anything else on your site. The following robots.txt contents tell them not to include the "images" folder in their indexing:

```
User-agent: *
Disallow: /images/
```
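The sketch below shows how a compliant crawler reads those two lines. It uses Python's standard `urllib.robotparser` module. The `www.example.com` URLs are placeholders, and nothing is fetched over the network because the rules are parsed straight from a string.

```python
from urllib.robotparser import RobotFileParser

# The rules from the robots.txt example above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /images/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for agent in ("Googlebot", "Bingbot", "SomeOtherBot"):
        for url in ("http://www.example.com/images/photo.jpg",
                    "http://www.example.com/index.html"):
            # The "*" record matches every user-agent name.
            print(agent, url, rp.can_fetch(agent, url))
```

The `*` record applies to any user-agent, so `can_fetch` returns False for anything under `/images/` no matter which bot name is passed in. This only describes what a well-behaved crawler chooses to do. It blocks nothing by itself.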

The asterisk (*) means that the rule applies to all search engines. The alternative is to list each search engine by name, but that's overkill because you would need to include them ALL. Ok, now that part is done: if you really want to prevent images from showing up in search results, don't rely on the robots.txt file at all. Not all search engines are friendly. If anything, publishing a list of your sensitive folders for all and sundry to read is about the least cool idea of all.
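The second point is easy to demonstrate. Because robots.txt is public, anyone can pull the Disallow lines out of it with a few lines of code. The sketch below uses a hypothetical robots.txt with an extra `/members/photos/` folder. It relies on simple line parsing rather than a full robots.txt parser.

```python
# Hypothetical robots.txt for a site with a second, more sensitive folder.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /images/
Disallow: /members/photos/   # the folder you least wanted anyone to notice
"""


def disallowed_paths(robots_txt: str) -> list:
    """Pull every non-empty Disallow path out of a robots.txt file."""
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


if __name__ == "__main__":
    print(disallowed_paths(SAMPLE_ROBOTS_TXT))
```

Run against your own robots.txt, `disallowed_paths` returns the same list an unfriendly site grabber could start from.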

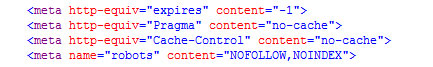

The following tags can be used to prevent pages from being indexed and

indexed though:
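The tags referred to here are presumably the standard robots meta tag, placed in a page's `<head>`, for example `<meta name="robots" content="noindex, nofollow">`. That identification is an assumption. On that assumption, the sketch below shows roughly how a crawler that honours the tag would pick out its directives. `RobotsMetaParser` and `robots_directives` are hypothetical names built on Python's standard `html.parser`. Like robots.txt, the tag is a request, not a lock.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lower-cases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        for directive in attributes.get("content", "").split(","):
            if directive.strip():
                self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set:
    """Return the robots meta directives found in an HTML page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


PAGE = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
```

A crawler that respects the tag would check `"noindex" in robots_directives(page)` before indexing. One that doesn't respect it simply never looks.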

The first rule to employ is... do not provide a clear path from your home page to the images concerned. Ok, that's not easy to do without some clever scripting. Unless your protected pages are located within a members-only section that requires a log-in, it will be very difficult not to link your home page to your protected pages, since otherwise your visitors may never find them.
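A members-only section can be as simple as a check placed in front of the protected folder. The sketch below is a hypothetical gate, not any particular platform's API. It assumes a protected folder called `/members/`, a log-in cookie called `session_id`, and a `LOGGED_IN_SESSIONS` set standing in for however your server remembers who has logged in. Anything without a valid log-in, spiders included, is redirected to an assumed `/login` page.

```python
from http.cookies import SimpleCookie

# Hypothetical values: the protected folder, the log-in cookie's name, and an
# in-memory set standing in for the server's record of logged-in sessions.
PROTECTED_PREFIX = "/members/"
LOGIN_COOKIE = "session_id"
LOGGED_IN_SESSIONS = {"3f9c2a7e"}


def members_gate(path: str, cookie_header: str) -> tuple:
    """Return (HTTP status, body or redirect target) for a request to `path`."""
    if not path.startswith(PROTECTED_PREFIX):
        return "200 OK", "public page"

    cookie = SimpleCookie()
    cookie.load(cookie_header or "")  # parse the raw Cookie: request header
    morsel = cookie.get(LOGIN_COOKIE)
    if morsel is not None and morsel.value in LOGGED_IN_SESSIONS:
        return "200 OK", "protected content"

    # No valid log-in: visitors and spiders alike are sent to the log-in page.
    return "302 Found", "/login"
```

Since nothing under `/members/` is reachable without logging in, a spider following links from the home page stops at the log-in page.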

For this obscurity, many recommend encrypting the HTML of your web pages. That's useless, though, because site grabbers and search engines use web browser resources to load the pages for spidering. Some tricks can, however, be employed to sort the spiders from the web browsers by detecting what they support. For example, not many search engines have JavaScript enabled, and almost none support cookies.

A huge trap for search engines is to write a cookie on page load that records the user's session ID. Most web servers running server-side scripts track users by assigning each one a unique session identifier. That ID remains current until the session ends, either when the user leaves the site or when the session times out after a period of inactivity. Then, as each page loads, it first reads the cookie and checks whether the recorded session ID matches the current one. If the "visitor" cannot read and write cookies, you have detected them and can now do whatever you like with them, for example redirect them to an error page or withhold the content.
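The following is a minimal Python sketch of that trap, under a few assumptions. `session_id` stands for whatever ID your server platform already assigns. The cookie name `visitor_sid`, the `/error.html` page, and the function names are hypothetical. Every page would send the cookie from `trap_cookie_header`, and protected pages would also call `handle_protected_page`. A genuine visitor who lands directly on a protected page has not received the cookie yet. For that reason, the trap works best when protected pages can only be reached after at least one ordinary page.

```python
import secrets
from http.cookies import SimpleCookie

TRAP_COOKIE = "visitor_sid"  # hypothetical cookie name


def trap_cookie_header(session_id: str) -> str:
    """Set-Cookie value that records the current session ID (send on every page)."""
    cookie = SimpleCookie()
    cookie[TRAP_COOKIE] = session_id
    cookie[TRAP_COOKIE]["path"] = "/"
    cookie[TRAP_COOKIE]["httponly"] = True
    return cookie[TRAP_COOKIE].OutputString()


def passes_cookie_trap(cookie_header: str, session_id: str) -> bool:
    """True only if the client sent back a cookie matching the current session."""
    cookie = SimpleCookie()
    cookie.load(cookie_header or "")
    morsel = cookie.get(TRAP_COOKIE)
    return morsel is not None and morsel.value == session_id


def handle_protected_page(cookie_header: str, session_id: str):
    """Return (status, headers): serve the page or redirect a suspected bot."""
    headers = [("Set-Cookie", trap_cookie_header(session_id))]
    if passes_cookie_trap(cookie_header, session_id):
        return "200 OK", headers
    headers.append(("Location", "/error.html"))  # hypothetical error page
    return "302 Found", headers


if __name__ == "__main__":
    sid = secrets.token_hex(8)  # stand-in for the server's own session ID
    print(handle_protected_page("", sid)[0])                      # cookieless client
    print(handle_protected_page(f"{TRAP_COOKIE}={sid}", sid)[0])  # browser that kept the cookie
```

A browser that kept the cookie passes on its next request. A cookieless spider never sends the cookie back and is redirected every time.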

The second rule is not to assume that anyone is honest. There are thousands upon thousands of web servers spidering web sites looking for all sorts of content to borrow or steal, and some may be using cookie-enabled browsers. However, most use an out-of-the-box solution that can be detected from its user-agent string. Ok, so user-agents can be tampered with, but by now you know more about the enemy. For any copy protection solution to be effective, it needs a combination of measures, because on the Internet you are exposed from many different angles.
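A user-agent check is only a string match, so it is easy to sketch. The marker list below is an illustrative assumption and far from complete, and any of these strings can be faked. That is why the hypothetical `suspected_bot` function combines the check with the result of a cookie test like the trap described above, passed in here as a plain boolean.

```python
# Illustrative and deliberately incomplete: substrings found in the default
# User-Agent strings of a few well-known crawlers and download tools.
KNOWN_BOT_MARKERS = (
    "googlebot",
    "bingbot",
    "httrack",
    "wget",
    "curl/",
    "python-requests",
)


def looks_like_bot(user_agent: str) -> bool:
    """Flag requests whose User-Agent is missing or matches a known bot marker."""
    if not user_agent or not user_agent.strip():
        return True  # mainstream browsers always send a User-Agent
    ua = user_agent.lower()
    return any(marker in ua for marker in KNOWN_BOT_MARKERS)


def suspected_bot(user_agent: str, passed_cookie_trap: bool) -> bool:
    """Combine checks: either signal alone is enough to treat the visitor as a bot."""
    return looks_like_bot(user_agent) or not passed_cookie_trap
```

Neither check is conclusive by itself. A faked user-agent gets past the first check, and a cookie-enabled grabber gets past the second, so each one covers some of the other's gaps.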

Some webmasters have devised complex solutions that not only detect all manner of search spiders and site grabbers but also let them watch those visitors live, seeing where they come in and where they go. Pondering how some search engines find web pages that are unlinked and known only to you can be enlightening.
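You don't need a complex solution to start watching where spiders come in and where they go, because your server's access log already records it. The sketch below assumes the common Apache/nginx "combined" log format. It groups requests by IP address and user-agent in the order they were logged. The sample lines use documentation IP ranges, and their agents are made up.

```python
import re
from collections import defaultdict

# Assumes the Apache/nginx "combined" access-log format; adjust for others.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 5120 "-" "Wget/1.21.3"',
    '203.0.113.5 - - [10/Oct/2023:13:55:37 +0000] "GET /gallery.html HTTP/1.1" 200 2048 "http://www.example.com/" "Wget/1.21.3"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /images/photo.jpg HTTP/1.1" 200 40960 "-" "ExampleBot/1.0"',
]


def crawl_paths(log_lines):
    """Map each (IP, user-agent) visitor to the paths it requested, in log order."""
    visits = defaultdict(list)
    for line in log_lines:
        match = LOG_LINE.match(line)
        if match:  # skip lines in any other format
            visits[(match["ip"], match["agent"])].append(match["path"])
    return dict(visits)


def entry_points(paths_by_visitor):
    """The first path each visitor requested, i.e. where they came in."""
    return {visitor: paths[0] for visitor, paths in paths_by_visitor.items()}


if __name__ == "__main__":
    for visitor, entry in entry_points(crawl_paths(SAMPLE_LOG)).items():
        print(visitor, "came in at", entry)
```

A visitor whose entry point is an image or an unlinked page, with no referer, is worth a closer look.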

See the Link Protection and HTML Encryption sections for some more ideas.
